Teleman

Great, thanks a lot for taking the time to go through this! It will be an outstanding contribution to the system!

However, I'm afraid it is currently not giving correct results. These types of algorithms are really delicate, so when attempting a translation like this it is important to double-check the results at every "checkpoint" (in this case we are using the intermediate plots as checkpoints).

The idea is to compare the output of the Python script with that of the MATLAB script at each of the checkpoints.

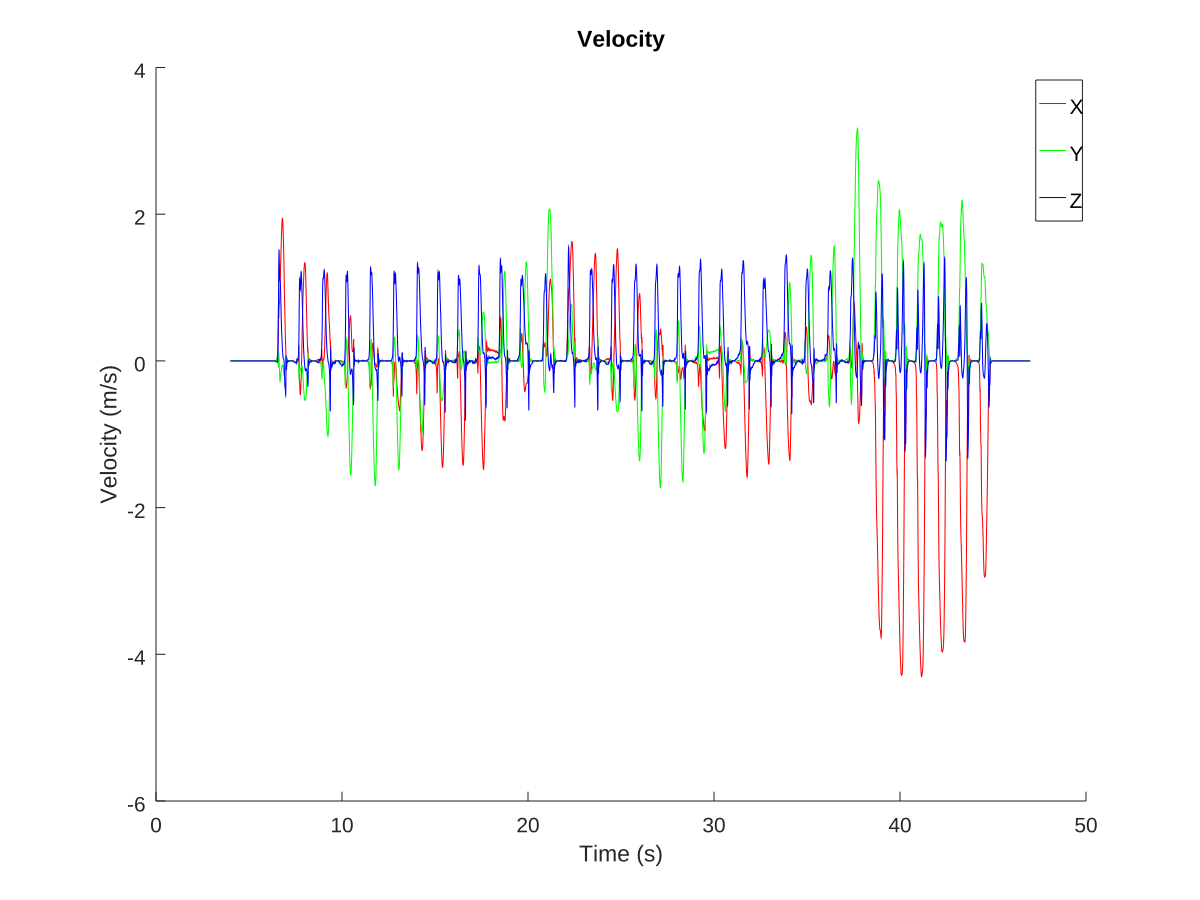

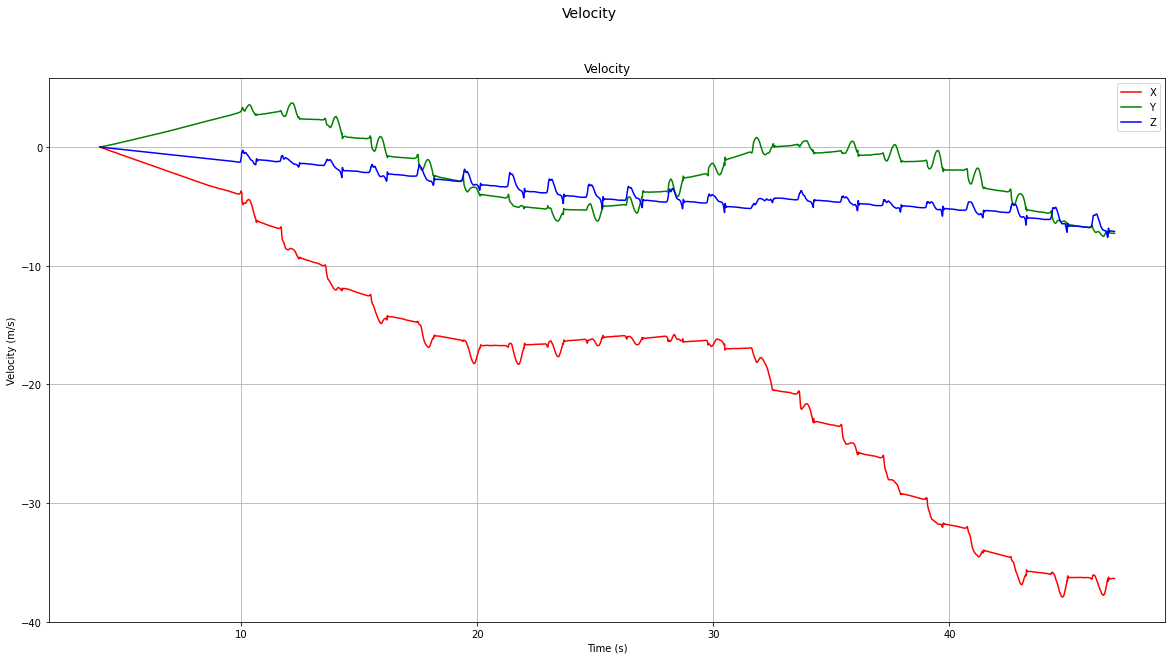

To take a significant example: the translational velocity plots are completely different. Note how the resulting velocity on all three axes remains stable at zero in the MATLAB plot, while it drifts noticeably in the Python one.

matlab translational velocities

python translational velocities

If you go further and compare the translational position plots (not pasted here), you will see that the delta in position in the MATLAB one is no greater than 10 m, while in the Python one there's a delta in position of about 500 m!

I would suggest taking a step back and translating it one step at a time, making sure the partial outputs match.
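Besides eyeballing the plots, the partial outputs can also be compared numerically. Here is a minimal sketch of what I mean, not tied to the actual script: `compare_checkpoint` is a hypothetical helper, the tolerance is an arbitrary choice, and the arrays are synthetic stand-ins (in practice the MATLAB side could be exported with `save` and read in Python with `scipy.io.loadmat`).

```python
import numpy as np


def compare_checkpoint(name, python_out, matlab_out, atol=1e-6):
    """Compare one intermediate result; return (matches, max_abs_diff).

    Hypothetical helper for illustration; `atol` is an assumed tolerance.
    """
    python_out = np.asarray(python_out, dtype=float)
    matlab_out = np.asarray(matlab_out, dtype=float)

    # A shape mismatch usually means a transposed or truncated array.
    if python_out.shape != matlab_out.shape:
        print(f"{name}: shape mismatch {python_out.shape} vs {matlab_out.shape}")
        return False, float("inf")

    if python_out.size == 0:
        max_abs_diff = 0.0
    else:
        max_abs_diff = float(np.max(np.abs(python_out - matlab_out)))

    matches = max_abs_diff <= atol
    print(f"{name}: max abs diff = {max_abs_diff:.6g} ({'OK' if matches else 'MISMATCH'})")
    return matches, max_abs_diff


# Synthetic stand-in data (NOT real results): a velocity that stays at zero
# versus one that drifts linearly over 10 seconds.
t = np.linspace(0.0, 10.0, 101)
matlab_vel = np.zeros((101, 3))
python_vel = matlab_vel + 0.5 * t[:, None]

compare_checkpoint("translational velocity", python_vel, matlab_vel)
```

Running a check like this after each translated step points at the first step where the two scripts diverge, instead of only showing the accumulated drift at the end.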

For example, in the first checkpoint, "Plot data raw sensor data and stationary periods", the important thing to get right is the stationary periods, which the MATLAB script plots on the accelerometer figure. At this step the Python script plots only the gyroscope figure, which doesn't give you much information.
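One way to check the stationary periods without relying on the figure alone is to turn the stationary flag into a list of intervals and compare those between the two scripts. This is only a sketch: `stationary_intervals` is a hypothetical helper, it assumes the stationary detection produces one boolean per sample, and the mask below is made up.

```python
import numpy as np


def stationary_intervals(mask):
    """Return half-open (start, end) sample index pairs where mask is True.

    Hypothetical helper; assumes `mask` holds one boolean per sample.
    """
    mask = np.asarray(mask, dtype=bool)
    # Pad with zeros so periods touching either end still produce an edge.
    padded = np.concatenate(([0], mask.astype(int), [0]))
    edges = np.diff(padded)
    starts = np.flatnonzero(edges == 1)   # False -> True transitions
    ends = np.flatnonzero(edges == -1)    # True -> False transitions
    return list(zip(starts.tolist(), ends.tolist()))


# Made-up mask for illustration only.
example_mask = [1, 1, 0, 0, 1, 0, 1, 1, 1]
print(stationary_intervals(example_mask))
```

Keep in mind that Python indices are 0-based and MATLAB's are 1-based, so the interval boundaries need to be shifted by one before comparing them.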