Hi,

The FBX format should also contain the original rest pose, but I don't know how you can retrieve it inside Houdini.

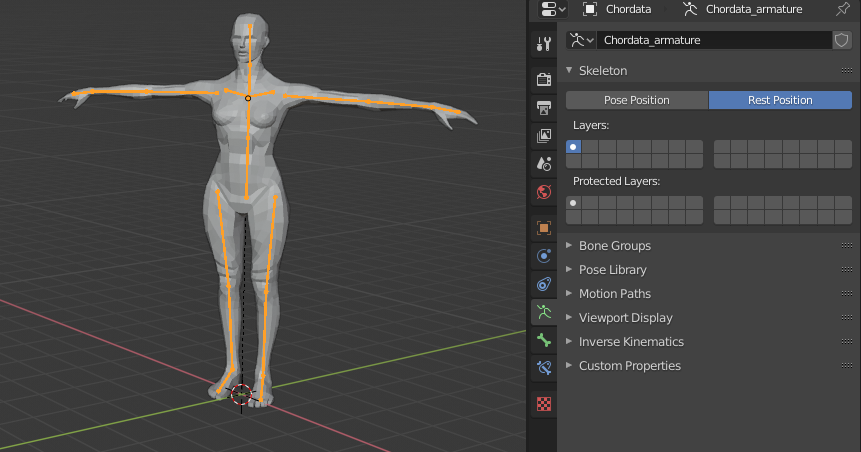

If you need to export a static FBX with only the T-pose, select [Rest Position] in Blender's Properties panel > Armature Data tab > Skeleton. The armature object must be selected, as in the picture.

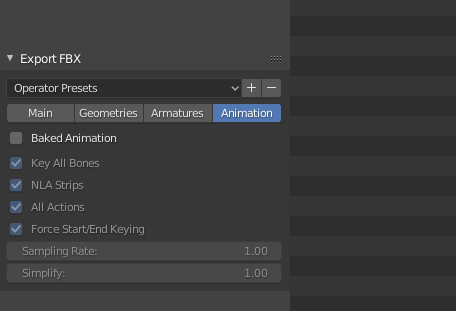

Then export with the "Baked Animation" option disabled. You can find it among the export options at the lower left of the FBX export screen.
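If you prefer to script these two steps, here is a minimal sketch, assuming Blender's Python API (`bpy`). `Armature.pose_position` is the property behind the [Rest Position] toggle, and the `bake_anim` argument of `bpy.ops.export_scene.fbx` corresponds to the "Baked Animation" checkbox. The helper name `export_rest_pose_fbx` is hypothetical, and the armature data and export operator are passed in as arguments so the function does not import `bpy` itself.

```python
def export_rest_pose_fbx(armature_data, export_fbx, filepath):
    """Export a static FBX of an armature in its rest (T-) pose.

    armature_data: the armature's data block (bpy.types.Armature),
        e.g. bpy.context.object.data with the armature object selected.
    export_fbx: the FBX export operator, normally bpy.ops.export_scene.fbx.
    """
    previous = armature_data.pose_position
    # Same as Armature Data tab > Skeleton > "Rest Position" in the UI.
    armature_data.pose_position = 'REST'
    try:
        # bake_anim=False matches unticking "Baked Animation" in the exporter.
        return export_fbx(filepath=filepath, bake_anim=False)
    finally:
        # Restore the previous setting so the scene is left as it was.
        armature_data.pose_position = previous
```

Inside Blender, with the armature selected, you would call it from the Python console as `export_rest_pose_fbx(bpy.context.object.data, bpy.ops.export_scene.fbx, "/tmp/rest_pose.fbx")` (the output path is just an example).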

> **sggfx:** Also, regarding the mocap data, it appears as though the animation is pinned to a center point. I think I read something about it in another thread too. Is this a limitation of this type of motion capture?

It's not a limitation of inertial capture as such. Other technologies can simply capture translation much more accurately.

We just haven't had enough time yet to complete the implementation of the algorithm that calculates translation. You can follow the state of development in this thread. If the contributors aren't able to take care of it sooner, the ground animation feature should be available with our official release.

We will also provide hooks to receive absolute translation information from external devices, such as commercially available VR trackers. That way, users who want more precise translation will be able to integrate them.